Where To Look: Focus Regions for Visual Question Answering

Kevin J Shih, Saurabh Singh, Derek Hoiem

|

Abstract

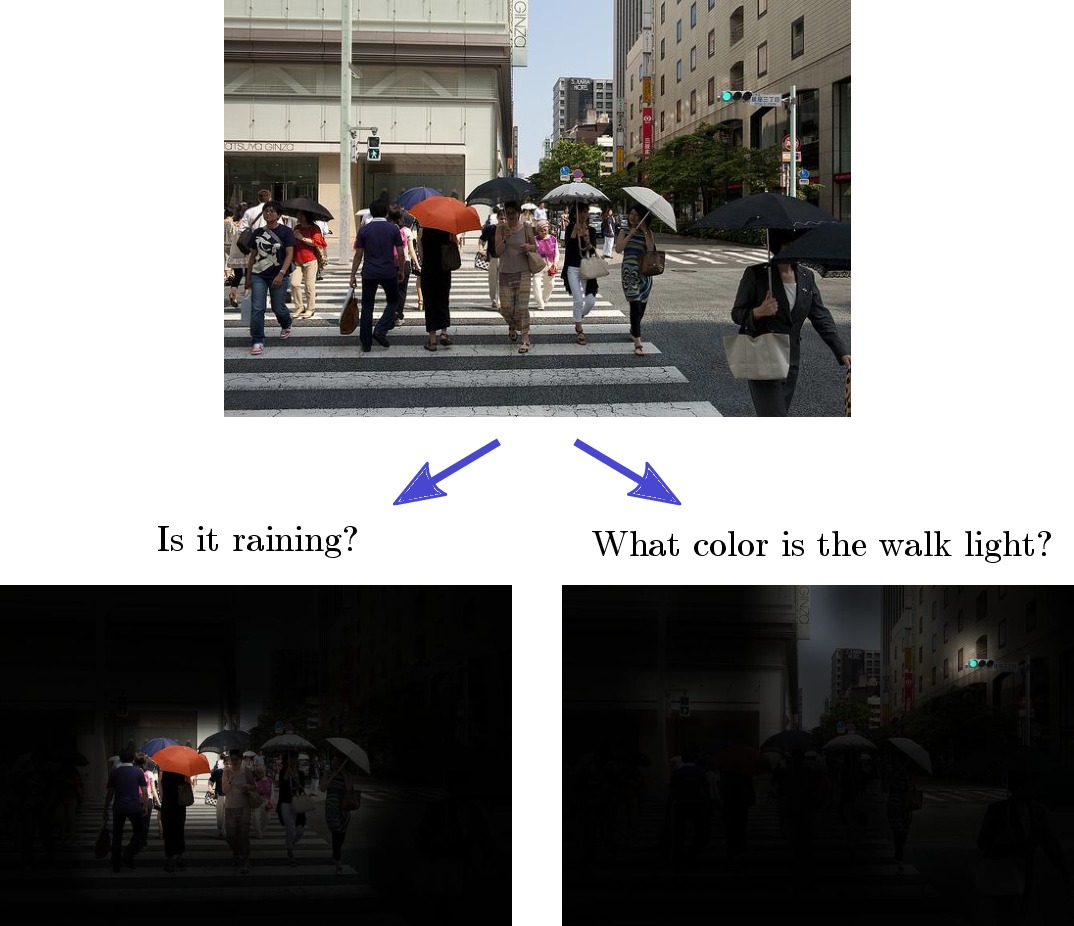

We present a method that learns to answer visual questions by selecting image regions relevant to the text-based query. Our method maps textual queries and visual features from various regions into a shared space where they are compared for relevance with an inner product. Our method exhibits significant improvements in answering questions such as ‘‘what color,’’ where it is necessary to evaluate a specific location, and ‘‘what room,’’ where it selectively identifies informative image regions. Our model is tested on the recently released VQA dataset, which features free-form human-annotated questions and answers. |

Code

Paper

Where To Look: Focus Regions for Visual Question Answering

Kevin J Shih, Saurabh Singh, Derek Hoiem

CVPR 2016

[arXiv link]

[Poster]

Results on test-standard real-mc

| Model | Yes/No | Number | Other | Overall |

| wtl | 77.18 | 33.52 | 56.09 | 62.43 |

| wtlv2 | 78.08 | 34.26 | 57.43 | 63.53 |

Note: wtlv2 features a widened fully connected layer and was the model uploaded to the leaderboard. It is also the model specified in the released code.

BibTeX

@inproceedings{shih2016wtl,

author = {Kevin J. Shih and Saurabh Singh and Derek Hoiem},

title = {Where To Look: Focus Regions for Visual Question Answering},

booktitle={Computer Vision and Pattern Recognition},

year = {2016}

}